Learning to Think Fast and Slow for Visual Language Models

By Chenyu Lin

Teaching Visual Language Models to Think Fast and Slow.

Humans don’t approach every problem with the same level of effort. When the answer is obvious, our brain reacts instantly: recognizing a face, reading a sign, noticing an emotion. But when the problem becomes abstract or ambiguous, like solving a geometry problem or interpreting a tricky chart, we slow down and begin to reason carefully. Psychologists describe this difference using two complementary thinking systems:

- System 1 — fast, intuitive, effortless. It works automatically, based on perception and experience.

- System 2 — slow, analytical, deliberate. It activates only when we need deeper reasoning.

Crucially, the human mind does not choose these systems randomly. We switch between them depending on the complexity of the task, saving effort where we can and investing it where we must. This simple efficiency principle allows humans to think both quickly and carefully without conscious control. If visual language models are meant to reason like humans, they should adopt the same principle—thinking fast on simple problems and slowing down only when deeper reasoning is truly needed.

What is DualMindVLM

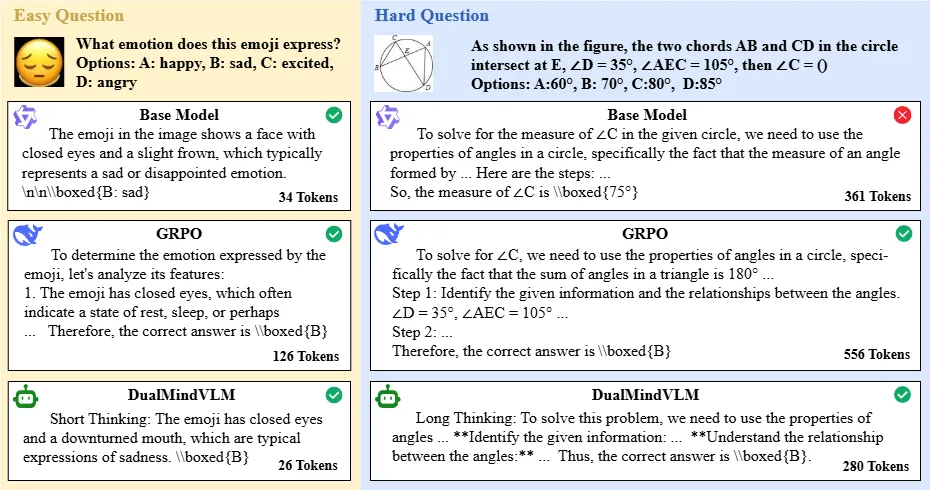

To bring this dual-thinking principle into multimodal reasoning, we introduce DualMindVLM, a visual language model designed to think fast and slow depending on the difficulty of the task. Unlike existing reasoning-oriented VLMs—most of which rely solely on System-2-style chain-of-thought generation—DualMindVLM does not treat every question as a complex problem. Instead, it automatically chooses whether to answer concisely or reason step by step, just as humans do. The example below illustrates this contrast clearly:

For a simple emoji recognition question, the System-2-only model writes a long reasoning chain to justify an obvious answer, while DualMindVLM responds directly and efficiently. On the other hand, when facing a challenging geometry task, DualMindVLM automatically activates slow, structured reasoning to solve the problem correctly.

How DualMindVLM works

DualMindVLM achieves automatic mode switching through two simple but innovative stages:

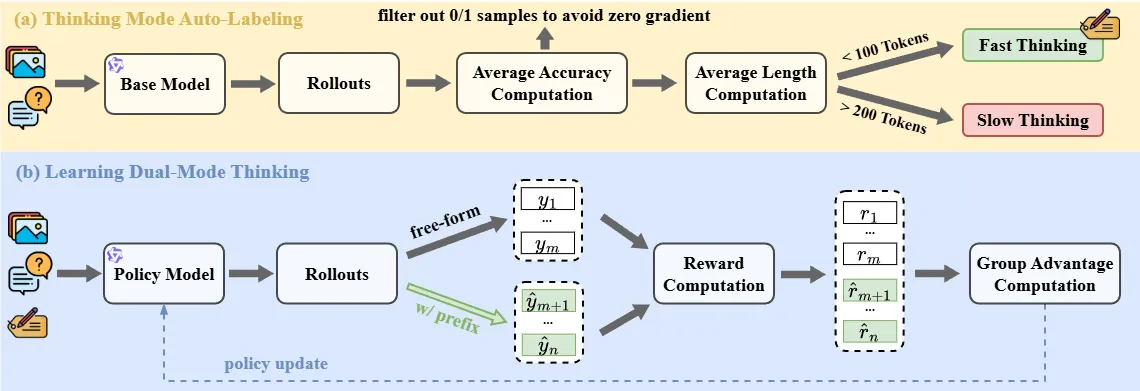

Stage-1: Thinking Mode Auto-Labeling Using the Base Model’s Output Length

Instead of relying on costly annotation pipelines, such as human labeling or using another large model (e.g., GPT-4o) to determine whether a problem is “easy” or “hard”, DualMindVLM makes use of a natural signal: the length of answers generated by the pretrained model itself. We find that the pretrained model naturally gives short answers to simple perception questions and longer ones to problems that demand real reasoning. By measuring response length across a few trial outputs, we can automatically label each sample as fast- or slow-thinking. This gives DualMindVLM a clean separation of thinking modes with no extra annotations or auxiliary models, turning difficulty labeling into a self-supervised signal grounded in what the model itself finds easy or hard.
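The labeling idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the actual pipeline: the function name `label_thinking_mode`, the use of the mean over trial lengths, and the 100-token threshold are all hypothetical choices, and the example lengths are made up.

```python
from statistics import mean


def label_thinking_mode(trial_lengths, threshold=100):
    """Label a sample 'fast' or 'slow' from the token lengths of a few
    trial responses produced by the base model.

    Assumptions (not from the original post): lengths are aggregated
    with a plain mean, and a single token threshold separates the modes.
    """
    if not trial_lengths:
        raise ValueError("need at least one trial response length")
    average_length = mean(trial_lengths)
    return "fast" if average_length < threshold else "slow"


# Made-up response lengths (in tokens) for two illustrative samples.
samples = {
    "emoji_recognition": [12, 9, 15],
    "geometry_problem": [240, 310, 275],
}

labels = {name: label_thinking_mode(lengths) for name, lengths in samples.items()}
print(labels)
```

In practice the aggregation rule and the threshold would need to be tuned on the base model's real length distribution; the point of the sketch is only that the label comes from the model's own outputs rather than from an external annotator.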

Stage-2: Dual-Mode Reinforcement Learning with Hybrid Sampling

Once samples are labeled as easy or hard, the model must not only learn how to respond appropriately but eventually learn to make that choice on its own. We achieve this with a simple RL strategy: during training, half of the responses are forced to follow the labeled thinking mode through the short or long thinking prefix, while the other half are generated freely with no hints, letting the model decide on its own. Both types share the same reward objective, so over time the free-form responses learn to behave like the guided ones. As a result, the model acquires the ability to choose the appropriate thinking style by itself, learning not only how to think, but when to think.
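The hybrid sampling step can be illustrated with a small Python sketch. It only shows how one group of rollouts might be split into guided and free-form halves; the prefix strings, the function name `build_rollout_prefixes`, and the group size of 8 are hypothetical, and the reward computation and policy update are omitted.

```python
# Hypothetical prefix strings; the post only says that a short or long
# thinking prefix is used, not what the exact text is.
THINKING_PREFIXES = {
    "fast": "Short Thinking:",
    "slow": "Long Thinking:",
}


def build_rollout_prefixes(label, group_size=8):
    """Return the response prefixes for one group of rollouts.

    Half of the rollouts start with the prefix matching the sample's
    label (guided); the other half start with an empty prefix so the
    model picks the thinking mode itself (free-form).
    """
    if label not in THINKING_PREFIXES:
        raise ValueError(f"unknown label: {label!r}")
    if group_size <= 0 or group_size % 2 != 0:
        raise ValueError("group_size must be a positive even number")
    half = group_size // 2
    guided = [THINKING_PREFIXES[label]] * half
    free = [""] * half
    return guided + free


prefixes = build_rollout_prefixes("slow", group_size=8)
print(prefixes)
```

Each prefix would be prepended to the start of the model's response before generation continues. Because guided and free-form rollouts are scored with the same reward, the free-form half is pushed toward the behavior of the guided half.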

Why DualMindVLM Matters

- Learning from its own behavior. DualMindVLM leverages the base model’s output length as a difficulty signal, eliminating the need for human labels or auxiliary teacher models. This produces a reliable supervision signal at “zero” annotation cost, and the model’s length preference naturally aligns with the self-sampling dynamics of RL, making it a particularly effective fit for training.

- State-of-the-art performance with significantly fewer tokens. While competing reasoning-oriented VLMs rely on uniformly long outputs, DualMindVLM achieves comparable or better accuracy with roughly 40% fewer tokens on average. By thinking briefly on easy tasks and reasoning thoroughly only when needed, it brings both accuracy and efficiency, rather than trading one for the other.

- Dual-mode thinking helps reduce hallucination. The ability to automatically choose between fast and slow thinking modes also shows promise in mitigating hallucinations. On HumbleBench, DualMindVLM achieves the strongest overall performance, suggesting that thinking less when appropriate can be just as important as reasoning more.

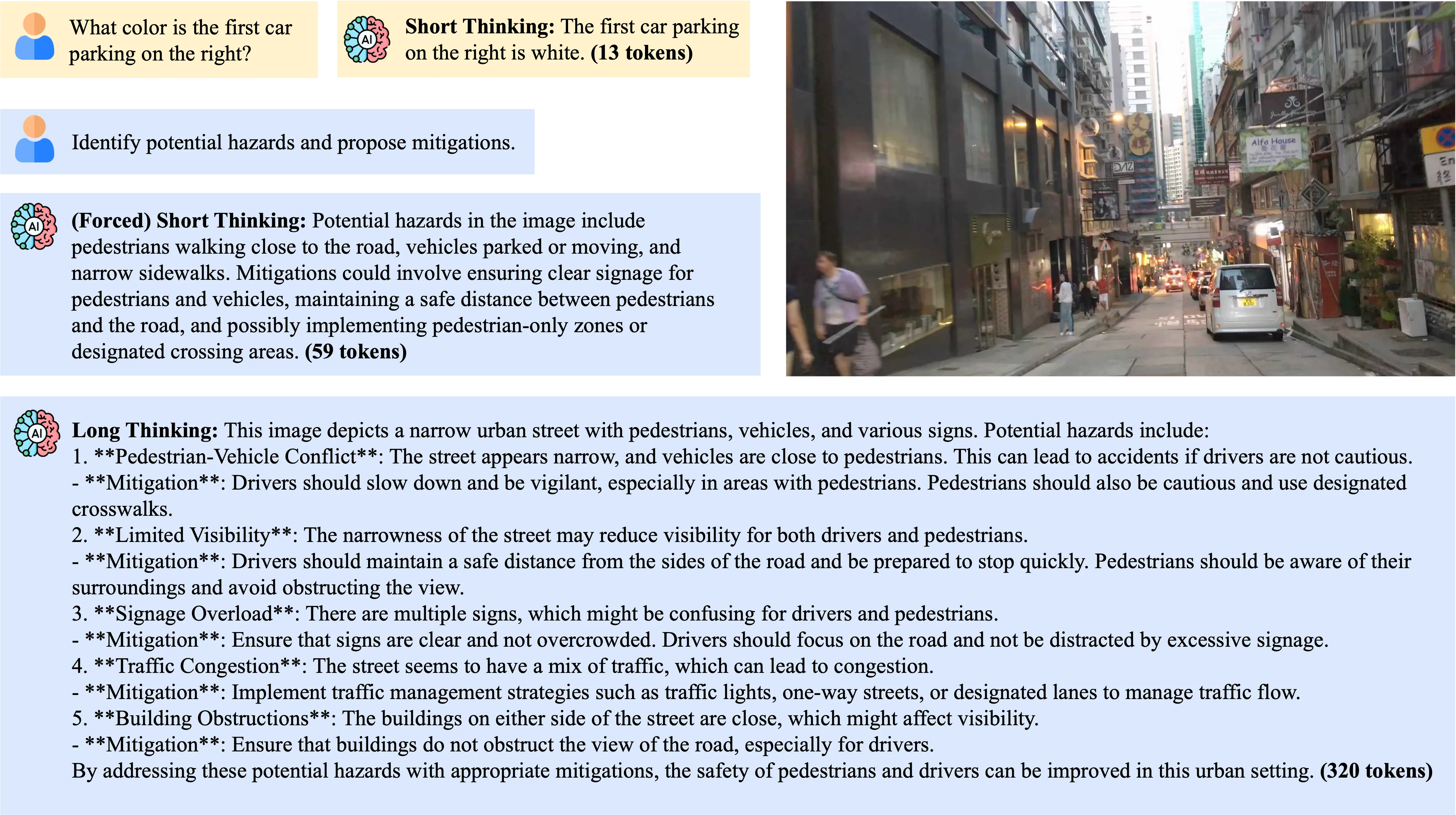

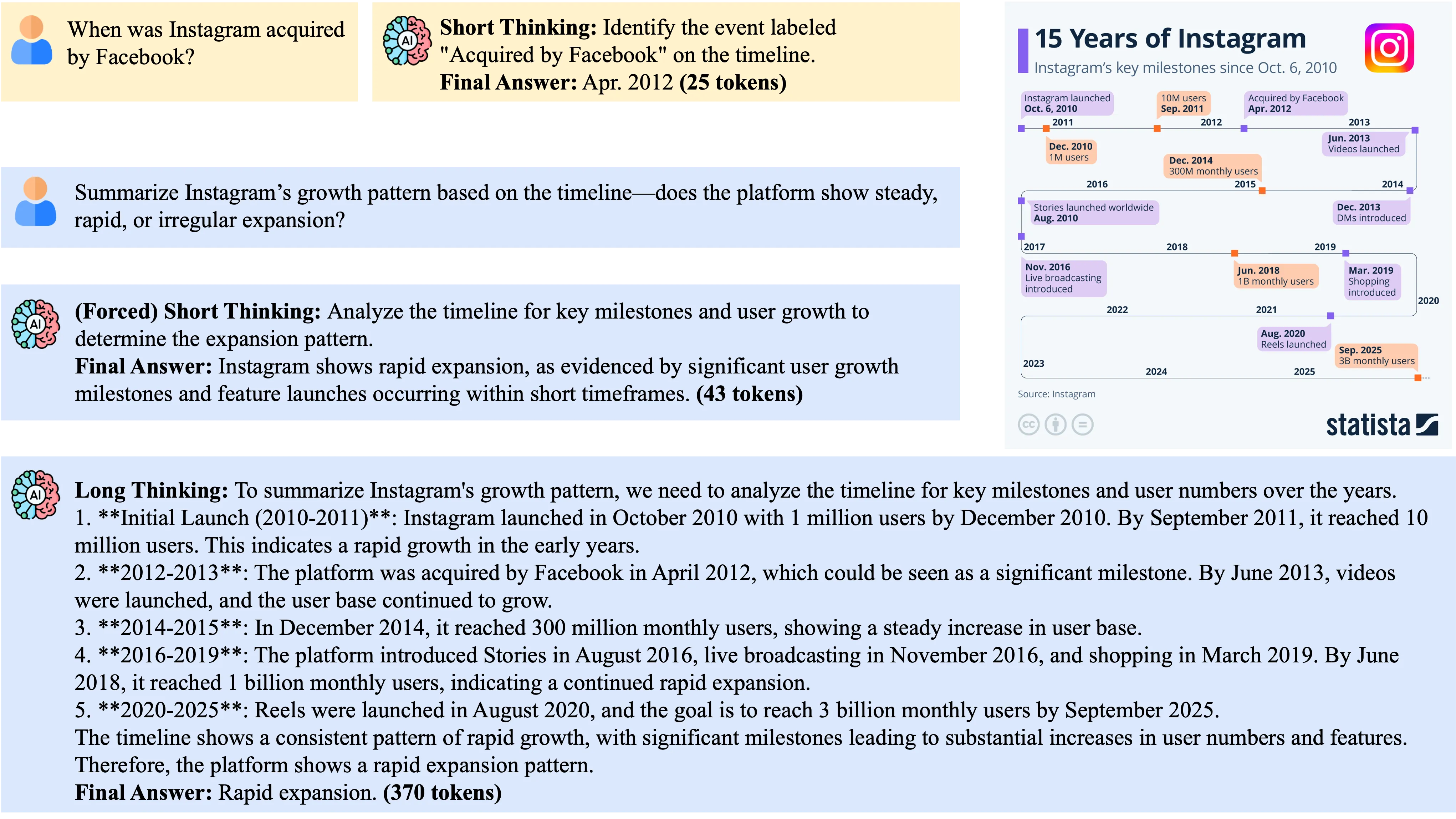

Below we show some applications of DualMindVLM in visual understanding. The examples are best viewed zoomed in.

Example 1 - Analyzing a driving scene:

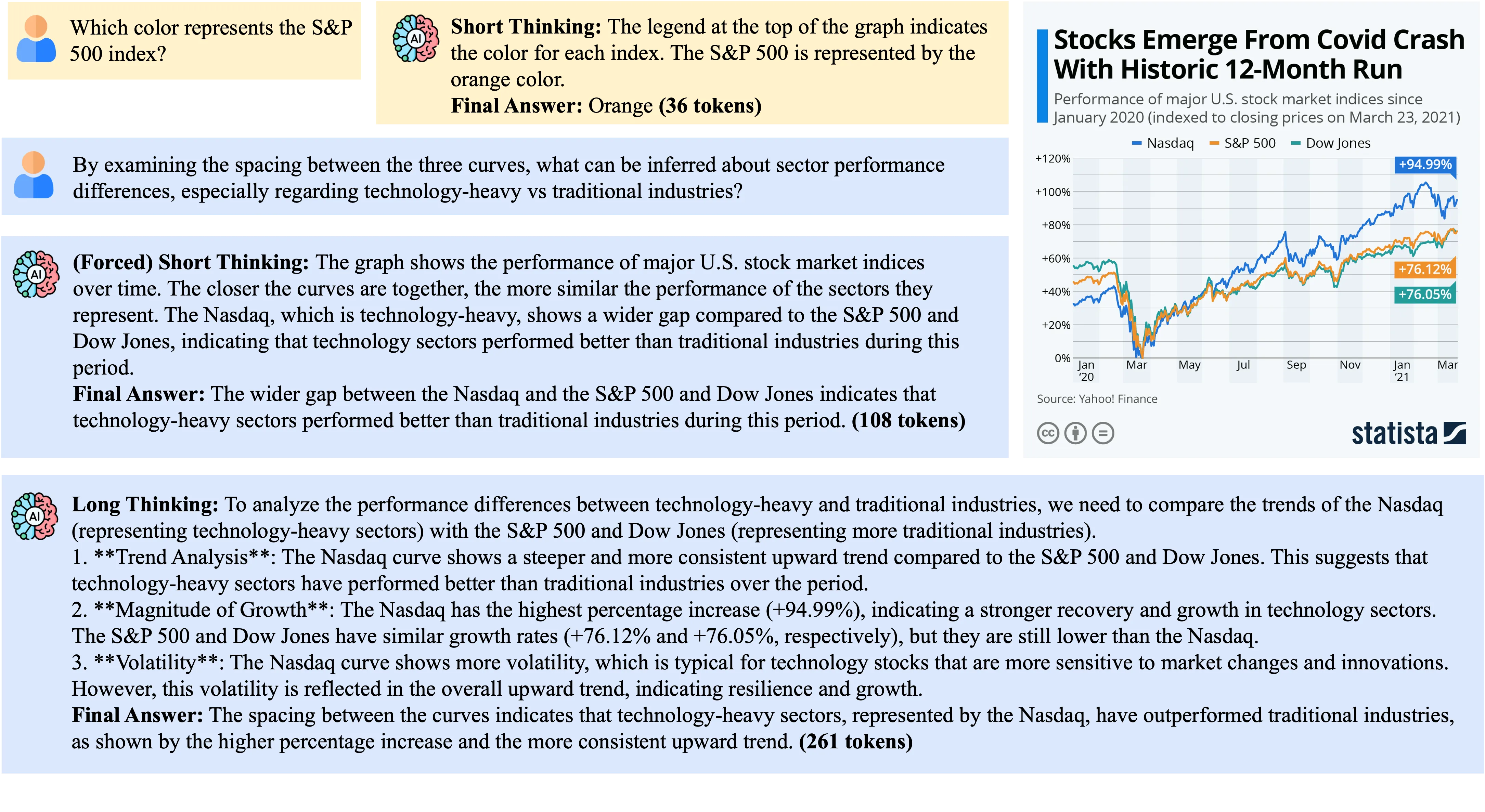

Example 2 - Analyzing an infographic:

Example 3 - Analyzing a stock chart:

Example 4 - AI Assistant:

Example 5 - Embodied AI:

Summary

DualMindVLM shows that better reasoning does not come from thinking more, but from thinking appropriately. By using a model’s own output length to supervise training and a simple hybrid RL strategy to teach mode switching, it learns to answer quickly when the problem is easy and reason thoroughly only when necessary. Inspired by the dual-system nature of human cognition, this efficient approach enables state-of-the-art performance, reduces token usage by roughly 40% on average, and even helps mitigate hallucination. In short, DualMindVLM highlights a new direction for multimodal reasoning: models should learn not just how to think, but when to think.